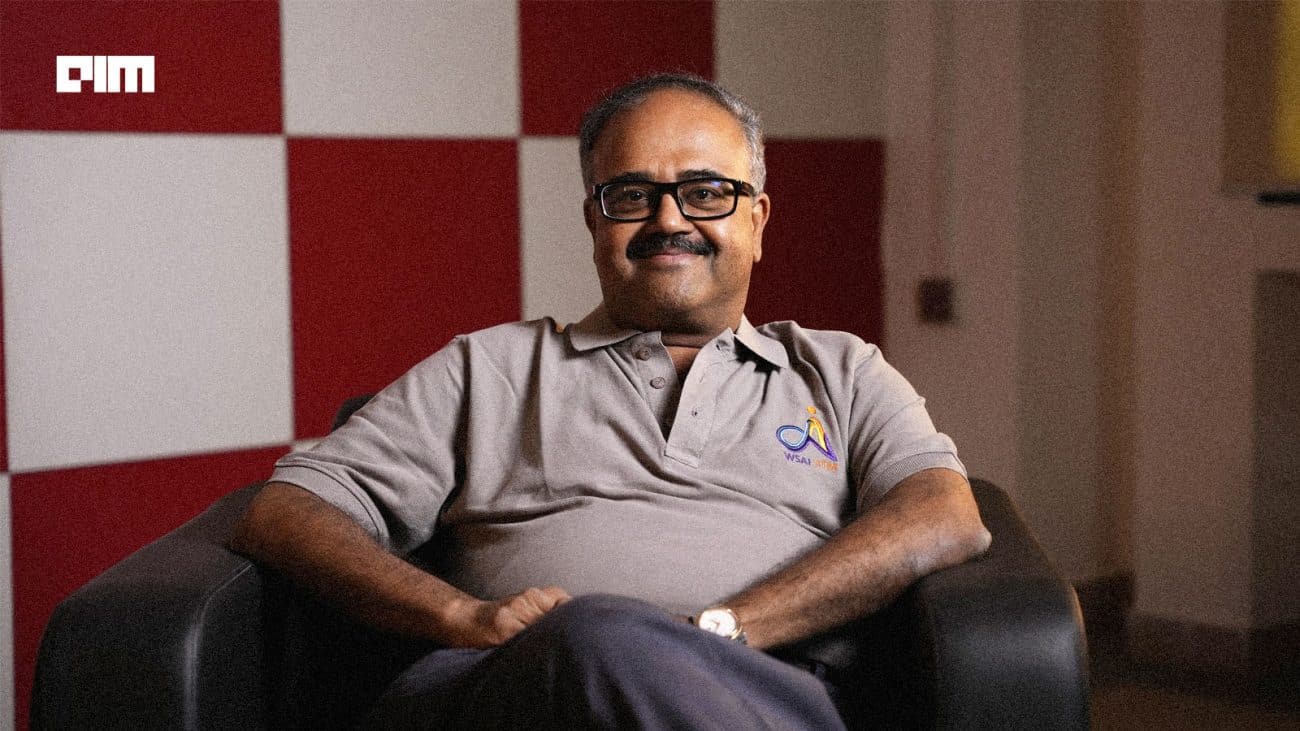

IIT Madras professor Balaraman Ravindran has cast doubt on the government’s ambitious plan to develop an indigenous large language model (LLM) under the IndiaAI mission within six months.

In an exclusive conversation with AIM, Ravindran indicated that the timeline may be too short to build a top-notch model and doubted whether the initiative can truly position India on the global AI stage.

“I think six months is too aggressive a timeline for us to really build super capable models. What we are probably going to get are right or decent models; we are not going to shake the world,” he said.

This comes against the backdrop of Union minister Ashwini Vaishnaw expecting India’s LLM to be ready within the next ten months. The government allocated ₹2,000 crore in the Union Budget 2025 for the IndiaAI mission and has so far received 67 proposals to develop indigenous AI foundational models, including 22 LLMs, for India’s diverse linguistic and cultural landscape.

Although startups are still uncertain about the timeline, the Ministry of Electronics and Information Technology (MeitY) said it will continue accepting proposals until the 15th of each month for the next six months, or until it receives a sufficient number, whichever comes first.

The government on Thursday launched AI Kosha and Compute Portal under the IndiaAI Mission. These initiatives aim to provide startups and researchers with access to datasets and high-performance computing resources for artificial intelligence development.

Taking academia as the reference point, Ravindran, who once taught Perplexity CEO Aravind Srinivas, stressed how short six months is. “[In that time] one semester happens—assignments and exams happen. Unless the research has already been happening, you can’t expect an LLM in six months.”

Meanwhile, IIT Madras, in partnership with a startup, has also sent a proposal for the IndiaAI mission. “Whoever submits a proposal has to set up as a company because six months of delivering something requires you to have a startup mindset,” the professor said.

He opined that the government should have invested more in academia. “You cannot really do cutting-edge automotive research in academia alone,” he said, adding that it has come to a point where if one wants to do cutting-edge AI research, one needs to partner with the industry.

Ravindran acknowledged that the current plan of the IndiaAI mission is better than the original plan of buying a lot of GPUs directly from NVIDIA. “Now, at least, we have these cloud vendors who can hopefully provide more subsidised things,” he said.

The IndiaAI mission will feature 18,000+ GPUs through public-private partnerships with players like Jio Platforms, NxtGen Data Center, Locuz Enterprise, E2E Networks, CtrlS DataCenters, CMS Computers, Orient Technologies, Tata Communications, Vensysco, and Yotta Data Services.

AIM found that AI startups such as Sarvam AI, Krutrim, CoRover.ai, TurboML and LossFunk, along with IIIT Hyderabad, had also submitted proposals under the mission.

Notably, Soket AI recently launched Project EKA, which seeks to unite AI researchers, engineers, and institutions across the country to develop multilingual, high-efficiency AI models that cater to India’s needs while competing globally.

Similarly, TurboML, a San Francisco-based AI company, has launched a new initiative to bring together Indian-origin AI researchers from around the world. The goal is to develop foundation models to support the country’s future in AI.

“We’re collaborating with the best minds to build foundation models that will power India’s AI future,” wrote TurboML CEO Siddharth Bhatia on X. In his post, he mentioned discussing the initiative in a meeting with IT minister Ashwini Vaishnaw.

Bhatia told AIM that his company plans to build an LLM for under $12 million.

Indian SaaS company Zoho has also entered the race. In a recent interview, group chief executive officer Shailesh Davey said the company plans to release two foundational models by the end of the year. Zoho is also developing foundational models for Indian languages but has not set a timeline on this yet.

More Investment and Compute Needed

Ravindran said that another advantage of the mission is that it will create a pool of trained engineers who can work with more researchers in the future and develop better solutions. He is still not convinced that India can build a DeepSeek-like model in six months, but he is hopeful that something fruitful can emerge in the next year and a half. “If you build DeepSeek in a year and a half, it has to be something very, very different,” he said.

The professor pointed out that India has a dearth of corporations investing money in fundamental research. “They are happy to do applied research,” he said.

He explained that China’s DeepSeek didn’t come out of nowhere and that research had been going on for a long period. “As far as China is concerned, there were at least 5-6 companies, maybe 10, that were at the level of DeepSeek, and they could just launch anytime,” Ravindran said.

He even suggested that the timing of DeepSeek’s release seemed strategic. “They stayed silent until the US announced the Stargate initiative.”

This, he said, was a clear response to the developments in the US. “Stargate said it needed $500 billion, so the Chinese countered with, ‘We can do it in $4 or $6 billion’,” he said, suggesting a political angle to the whole affair.

“It’s not like DeepSeek V3 just came out of the blue. If you look at a lot of things that they used in DeepSeek V3, many of the components are there in DeepSeek V2. They added RL fine-tuning instead of supervised fine-tuning in the end,” Ravindran said.

He added that American companies were trying to create a moat around themselves by claiming that it takes a lot of money to reach their level, which is perhaps one reason they did not want to explore cheaper alternatives.

Ravindran said that when he talks about India needing more compute, he means reaching a sustainable level, not an ‘Elon Musk-level’ of compute. Notably, Musk’s xAI trained Grok 3 on 200,000 GPUs. The IIT professor believes India needs to look beyond Transformer-based autoregressive training and even reinforcement learning-based fine-tuning. “I think they will soon be hitting a ceiling. People who worked on symbolic AI were way ahead of their time in how they thought about intelligence,” he concluded.