While companies like DeepSeek, Alibaba, and Meta host their open-weight models on cloud-based chatbots, the true value lies in the ability to run these models locally. This approach eliminates the reliance on cloud infrastructure.

Running these models locally not only alleviates privacy concerns and censorship restrictions but also lets developers fine-tune the models and tailor them to specific use cases.

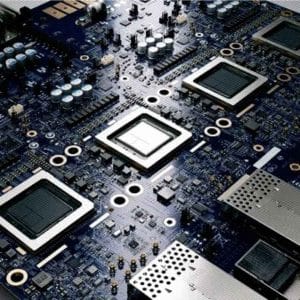

The best results generally require a large model trained on a large corpus of data, but deploying such a model locally demands high computing power and expensive hardware rigs.

Notably, John Leimgruber, a software engineer from the United States with two years of experience in engineering, managed to bypass the need for expensive GPUs by hosting the massive, 671 billion parameter DeepSeek-R1 model. He ran a quantised version of the model on a fast NVMe SSD.

In a conversation with AIM, Leimgruber explained what made it possible.

MLA, MoE, and Native 8-Bit Weights for the Win

Leimgruber used a quantised, non-distilled version of the model, developed by Unsloth.ai—a 2.51 bits-per-parameter model, which he said retained good quality despite being compressed to just 212 GB.

Even before quantisation, the model is natively built on 8-bit weights, which makes it quite efficient by default.

“For starters, each of those 671B parameters is just 8 bits for a total of 671 GB file size. Compare that to Llama-3.1-405B which requires 16 bits per parameter for a total of 810 GB file size,” Leimgruber added.
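The arithmetic behind these figures can be checked with a short sketch. It assumes decimal gigabytes (1 GB = 10^9 bytes) and treats the 2.51 bits-per-parameter figure as a uniform average across all weights; the function name `file_size_gb` is purely illustrative.

```python
def file_size_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate weight-file size in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Parameter counts and bit widths as quoted in the article.
deepseek_r1_native = file_size_gb(671e9, 8)     # native 8-bit weights
llama_405b_16bit = file_size_gb(405e9, 16)      # 16 bits per parameter
deepseek_r1_quant = file_size_gb(671e9, 2.51)   # assumed uniform average

print(f"DeepSeek-R1 at 8 bits:     {deepseek_r1_native:.0f} GB")
print(f"Llama-3.1-405B at 16 bits: {llama_405b_16bit:.0f} GB")
print(f"DeepSeek-R1 at 2.51 bits:  {deepseek_r1_quant:.1f} GB")
```

The last figure comes out at roughly 210.5 GB, close to the 212 GB cited for the quantised file; the small gap is plausibly due to rounding or file metadata, though the article does not say.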

Leimgruber ran the model on his gaming rig, which has 96 GB of RAM and an NVIDIA RTX 3090 Ti GPU with 24 GB of VRAM, after disabling the GPU.

He explained that the “secret trick” is to load only the KV cache into RAM, while allowing llama.cpp to handle the model files using its default behaviour—memory-mapping (mmap) them directly from a fast NVMe SSD. “The rest of your system RAM acts as disk cache for the active weights,” he added.

This means that most of the model runs directly from the NVMe SSD, with the system memory speeding up the access to the model.

Leimgruber also clarified that this approach won’t affect the SSD’s read or write cycle lifetime, because the model is accessed via memory mapping rather than swap memory, where data is frequently written and erased on disk.
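The memory-mapping behaviour described above can be illustrated with a minimal Python sketch. This is not llama.cpp's implementation, only the same operating-system mechanism on a small scale: a 4 MiB temporary file stands in for the weights file (an assumption for the demo), and it is mapped read-only so pages are fetched from disk on first access and never written back.

```python
import mmap
import os
import tempfile

# Stand-in for a large weights file; the real one would be hundreds of GB.
with tempfile.NamedTemporaryFile(delete=False) as f:
    f.write(os.urandom(4 * 1024 * 1024))  # 4 MiB of dummy "weights"
    path = f.name

with open(path, "rb") as f:
    # ACCESS_READ maps the file read-only. The OS loads pages from disk on
    # first touch and may keep them in its page cache; nothing is written.
    with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as weights:
        mapped_size = len(weights)
        # Only the pages backing this slice need to be read from disk.
        chunk = weights[1_000_000:1_000_016]

os.remove(path)
print(mapped_size, len(chunk))
```

Because the mapping is read-only, the only disk traffic is reads, which is consistent with Leimgruber's point about SSD wear.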

He could run the model at a little over two tokens per second. To put that in perspective, Microsoft recently revealed that the distilled version of the DeepSeek-R1 14B model ran at eight tokens per second. In contrast, AI models deployed on the cloud, like ChatGPT or Claude, can output 50-60 tokens per second.
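To make the gap concrete, the sketch below converts those rates into waiting time for a single response. The 1,000-token response length is a hypothetical figure chosen for illustration, and the rates are rounded from the numbers above (2 for "a little over two", 50 for the lower end of the cloud range).

```python
# Generation rates in tokens per second, rounded from the article's figures.
rates = {
    "DeepSeek-R1 671B from NVMe": 2.0,
    "Distilled DeepSeek-R1 14B": 8.0,
    "Cloud-hosted model (low end)": 50.0,
}

response_tokens = 1000  # hypothetical response length, not from the article


def seconds_for(tokens: int, tokens_per_second: float) -> float:
    """Time needed to generate `tokens` at a constant rate."""
    return tokens / tokens_per_second


wait_times = {name: seconds_for(response_tokens, r) for name, r in rates.items()}

for name, seconds in wait_times.items():
    print(f"{name}: {seconds:.0f} s ({seconds / 60:.1f} min)")
```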

However, he also suggested that having a single GPU with 16-24 GB of memory is still better than not having a GPU at all. “This is because the attention layers and kv-cache calculations can be run on the GPU to take advantage of optimisations like CUDA (Compute Unified Device Architecture) graphs while the bulk of the model’s MoE (Mixture of Experts) weights run in system RAM,” he said.

Leimgruber provided detailed benchmarks and examples of generations in a GitHub post.

Besides the native 8-bit weights, this is largely possible because of DeepSeek’s architecture. Its MoE design means that only 37B of the model’s parameters are active at a time while generating tokens.

“This is much more efficient than a traditional dense model like GPT-3 or Llama-3.1-405B because each token requires less computations,” Leimgruber said.
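A rough sketch of that comparison follows. It assumes, as a common rule of thumb rather than anything stated in the article, that per-token compute scales with the number of active parameters, and it ignores attention and routing overhead.

```python
# Parameter counts quoted in the article.
deepseek_total_params = 671e9
deepseek_active_params = 37e9   # active per token under MoE routing
llama_dense_params = 405e9      # a dense model uses every parameter per token

# Share of DeepSeek-R1's weights used for any single token.
active_fraction = deepseek_active_params / deepseek_total_params

# Assumption: per-token compute is proportional to active parameters.
dense_to_moe_ratio = llama_dense_params / deepseek_active_params

print(f"Active share of DeepSeek-R1 per token: {active_fraction:.1%}")
print(f"Llama-3.1-405B active parameters vs DeepSeek-R1: {dense_to_moe_ratio:.1f}x")
```

Under these assumptions, only about one in eighteen of DeepSeek-R1's parameters is used for each token, which helps explain why serving most of the weights from disk is workable at all.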

Moreover, the Multi-Head Latent Attention (MLA) allows for longer context chats, as it performs calculations on a compressed latent space instead of fully uncompressed context like most other LLMs.

All things considered, the best way for most home users to run a model locally on a desktop without a GPU is to take advantage of applications like Ollama and much smaller, distilled versions of DeepSeek-R1. One notable option is the distilled variant of Alibaba’s Qwen-2.5-32B model, which is fine-tuned on DeepSeek-R1’s outputs to produce reasoning-like responses. A user recently published a tutorial on GitHub on how to deploy this model locally using Inferless.

For even smaller versions, LinuxConfig.Org posted a tutorial on deploying the 7B version of DeepSeek-R1 without a GPU. Similarly, DataCamp published a detailed tutorial on deploying the model on Windows and Mac machines using Ollama.
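For readers who want a sense of what querying a local model looks like, here is a minimal sketch using only Python's standard library. It is not taken from any of the tutorials mentioned; it assumes an Ollama server is already running on its default local port (11434) and that a distilled model has been pulled under the tag `deepseek-r1:7b`. Both the tag and the helper names are assumptions to adjust for your own setup.

```python
import json
import urllib.request

# Assumed defaults: local Ollama server and a previously pulled model tag.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "deepseek-r1:7b"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def generate(prompt: str, model: str = MODEL_TAG) -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Example usage, once the server is up and the model is available:
# print(generate("Explain memory mapping in one sentence."))
```

On a CPU-only machine, expect responses to arrive slowly; smaller distilled variants trade some quality for usable speed.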